From Data to Dialogue: Reclaiming Learning in the Age of AI

The Conversational Classroom: Building Dialogue with AI

If the Generative Turn taught us that learning happens through creation, the next challenge is sustaining the conversation that creation begins.

Because what truly deepens learning isn’t just making something but talking about it: explaining, questioning, revising, and refining our ideas in dialogue with others.

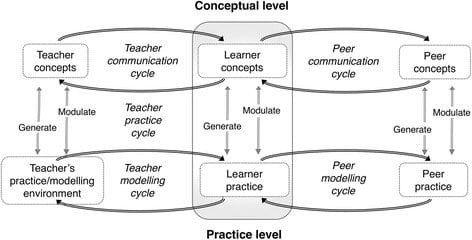

This is where Diana Laurillard’s Conversational Framework (2012) becomes a compass for teaching in the age of AI. It helps us see education not as the transfer of information but as a cycle of communication between teacher and learner, learner and peer, and increasingly, learner and machine.

The Essence of the Conversational Framework

Laurillard proposed that effective learning follows a dynamic dialogue:

Conceptual Exchange: The teacher presents ideas; the learner interprets and responds.

Practice and Feedback: The learner applies knowledge; feedback refines understanding.

Reflection and Adaptation: Both teacher and learner adjust their models of thinking.

This loop repeats across levels of abstraction, connecting theory to experience.

In this model, teaching is never static; it’s a continuous conversation about meaning.

When we embed AI into this cycle, the goal isn’t to replace either voice but to extend the conversation by giving learners more opportunities to articulate, test, and reconstruct understanding.

Example 1: AI as Reflective Partner

In a psychology course, students might discuss ethical dilemmas using an AI companion trained on professional guidelines.

Rather than offering “right” answers, the system poses counter-questions:

“What ethical principle might conflict with that choice?”

“How would this decision change if the participant were a minor?”

The AI doesn’t instruct; it provokes reflection.

Students refine their reasoning in response, and teachers later review transcripts to identify moments of cognitive dissonance worth unpacking in class.

Here, AI acts as a conversation starter, helping students rehearse reasoning before bringing it into the social space of discussion.

Example 2: The Adaptive Feedback Loop

In a chemistry lab simulation, an AI coach monitors experiment logs. When a student adjusts variables inconsistently, the system intervenes:

“You changed temperature but not concentration. What outcome do you expect from that?”

The student must articulate a prediction before proceeding. After observing results, the AI prompts:

“Did the outcome match your expectation? Why or why not?”

This back-and-forth keeps the learner in a reflective stance, turning trial and error into deliberate experimentation: dialogue as inquiry.
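To make the loop concrete, here is a minimal Python sketch of the predict-then-observe cycle described above. It is an illustration under stated assumptions, not a description of any real lab product: the class `LabCoach` and its method names are hypothetical, and the rule "prompt when some tracked variables change and others do not" is a deliberately simple stand-in for whatever an actual system would use to detect inconsistent adjustments.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class LabCoach:
    """Hypothetical coach: asks for a prediction when only some variables change."""

    tracked: tuple = ("temperature", "concentration")  # assumed variable names
    awaiting_prediction: bool = False
    transcript: list = field(default_factory=list)  # (speaker, text) pairs

    def on_variables_changed(self, before: dict, after: dict) -> Optional[str]:
        """Return a prompt if some tracked variables changed and others did not."""
        changed = [v for v in self.tracked if before.get(v) != after.get(v)]
        unchanged = [v for v in self.tracked if v not in changed]
        if not changed or not unchanged:
            return None
        prompt = (
            f"You changed {' and '.join(changed)} but not "
            f"{' and '.join(unchanged)}. What outcome do you expect from that?"
        )
        self.awaiting_prediction = True
        self.transcript.append(("coach", prompt))
        return prompt

    def record_prediction(self, text: str) -> None:
        """Store the student's prediction and unlock the next run."""
        self.transcript.append(("student", text))
        self.awaiting_prediction = False

    def can_run_experiment(self) -> bool:
        """The student may proceed only after stating a prediction."""
        return not self.awaiting_prediction

    def on_result_observed(self) -> str:
        """After the run, ask the student to compare outcome and expectation."""
        prompt = "Did the outcome match your expectation? Why or why not?"
        self.transcript.append(("coach", prompt))
        return prompt


coach = LabCoach()
print(coach.on_variables_changed(
    {"temperature": 25, "concentration": 0.5},
    {"temperature": 40, "concentration": 0.5},
))
print(coach.can_run_experiment())  # False until a prediction is recorded
coach.record_prediction("The reaction should speed up.")
print(coach.can_run_experiment())
print(coach.on_result_observed())
```

The design choice worth noticing is the gate in `can_run_experiment`: the simulation stays locked until the student has put a prediction into words. The stored transcript is what a teacher could later review.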

Example 3: Scaling Feedback Without Losing the Human

One of the hardest challenges in higher education is maintaining rich feedback at scale.

Imagine a writing course where AI provides first-pass formative feedback framed in conversational terms:

“You’ve argued strongly for economic reform here. What counter-arguments might strengthen your conclusion?”

The AI’s output isn’t an endpoint.

It creates talking points for tutorials. Teachers then step in to extend the conversation, guiding tone, nuance, and judgment while saving hours on mechanical corrections.

Instead of replacing feedback, AI helps teachers spend their energy where it matters most: interpreting meaning and mentoring growth.

The Teacher’s New Role: Dialogue Designer

Within this framework, teachers become designers of conversation. They decide:

What kinds of questions the AI should ask.

Where to hand the conversation back to human discussion.

How to assess reflection, not just response.

This design work demands the same intentionality as lesson planning: aligning each AI interaction with learning outcomes and ethical values. It ensures that technology remains a facilitator of thinking together, not a dispenser of canned replies.
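One way to picture these design decisions is as a small, teacher-authored plan that the AI must follow. The Python sketch below is purely illustrative: `DialoguePlan`, its fields, and the fixed turn limit are hypothetical names and assumptions, not part of Laurillard's framework or of any particular tool. The question stems are borrowed from Example 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DialoguePlan:
    """Hypothetical teacher-authored plan for one AI-supported activity."""

    learning_outcome: str
    question_stems: tuple       # what kinds of questions the AI should ask
    handoff_after_turns: int    # where to hand back to human discussion
    reflection_criteria: tuple  # what the teacher looks for when assessing

    def next_move(self, ai_turns_so_far: int) -> str:
        """Return the next AI question, or a handoff once the limit is reached."""
        if ai_turns_so_far >= self.handoff_after_turns:
            return "HANDOFF: bring this to class discussion."
        # Cycle through the stems in the order the teacher wrote them.
        return self.question_stems[ai_turns_so_far % len(self.question_stems)]


plan = DialoguePlan(
    learning_outcome="Reason about competing ethical principles",
    question_stems=(
        "What ethical principle might conflict with that choice?",
        "How would this decision change if the participant were a minor?",
    ),
    handoff_after_turns=2,
    reflection_criteria=("names a competing principle", "revises initial position"),
)

for turn in range(3):
    print(plan.next_move(turn))
```

Making the plan immutable (`frozen=True`) reflects the idea that the teacher, not the system, decides the shape of the conversation.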

The Ethical Edge of Dialogue

Dialogue brings responsibility. When AI participates in conversation, it also shapes perspective. We must therefore attend to:

Bias: Which voices are amplified or silenced by the model’s training data?

Transparency: Do students know when they’re engaging with an algorithm?

Privacy: Who owns the dialogue traces that record their thinking?

Responsible AI dialogue isn’t just about civility; it’s about agency: ensuring learners remain authors of their own ideas. As Laurillard reminds us, genuine learning dialogue “requires that each participant has a voice that can influence the other” (2012, p. 86). The same must hold true when one voice is synthetic.

Wrapping Up: From Data to Dialogue

Across this series, we’ve moved from diagnosing the problem to imagining solutions:

In Part 1, we saw that AI often misreads learning because it overlooks emotion, motivation, and metacognition.

In Part 2, we embraced Generativism, using AI to foster creation and reflection rather than compliance.

And now, in Part 3, we’ve grounded those ideas in conversation, which is the heartbeat of authentic education.

The next frontier isn’t smarter algorithms. It’s smarter conversations: ones where teachers, learners, and AI systems co-construct understanding through curiosity, feedback, and care.

Because education’s power has never come from data alone.

It has always lived in dialogue.

References

Laurillard, D. (2012). Teaching as a design science: Building pedagogical patterns for learning and technology. Routledge.