Moving from Policy to Practice

Helping Students Reflect on Their AI Use

If you’ve read any recent university AI policies, you’ve probably noticed they’re good at telling students what to do (disclose, verify, reflect) but rarely how to do it.

Most institutional frameworks now include AI disclosure requirements. Students are expected to declare which tools they used, show how outputs were checked, and reflect on the learning impact. Yet, in practice, many students (and teachers) are left wondering what that actually looks like.

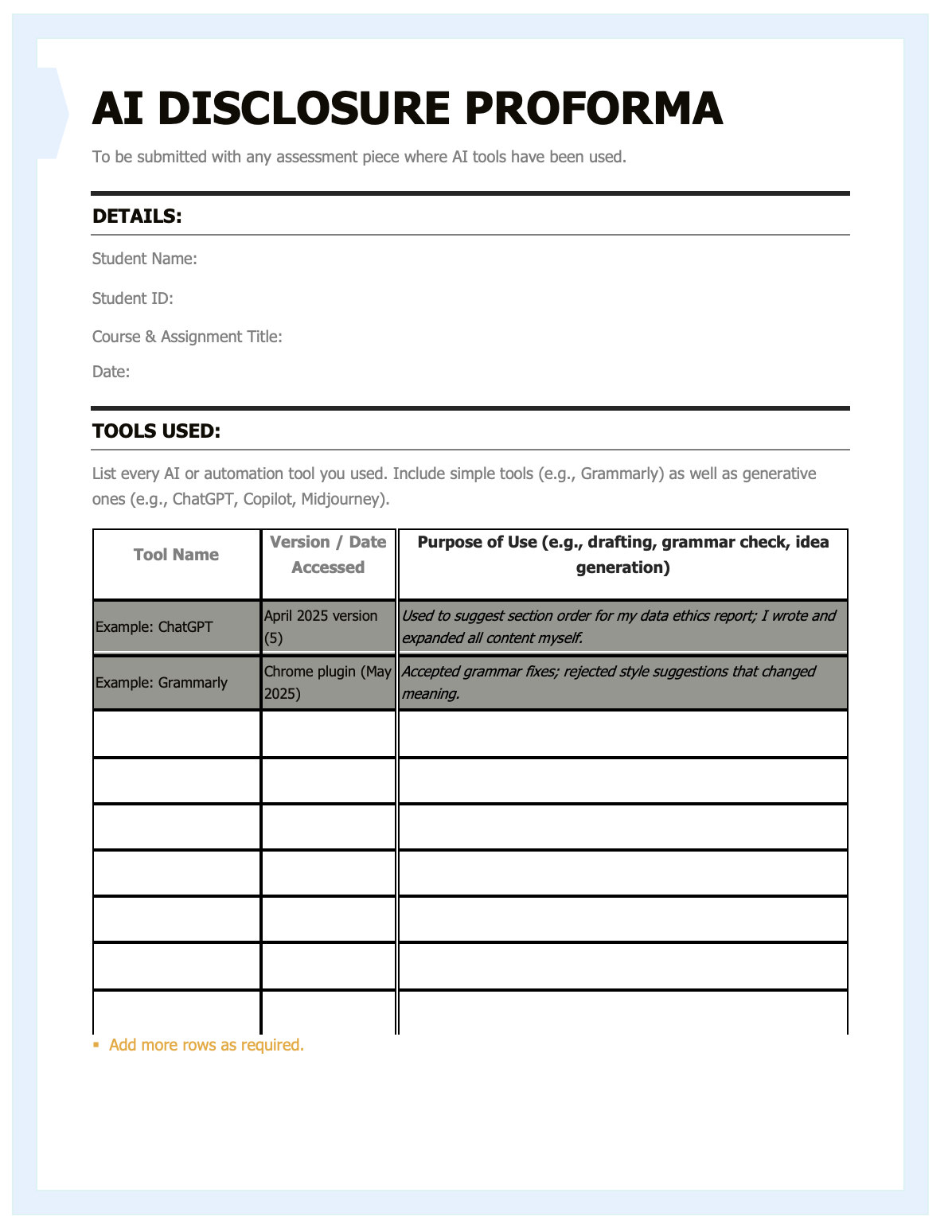

That’s why, in Chapter 5 of The AI Educator (in press), I’ve included a simple resource to help: the AI Use Disclosure Pro Forma. It’s a one-page document students can attach to any assessment where AI tools played a role.

Rather than adding bureaucracy, it aims to make learning visible. Students list tools, describe how they interacted with AI, and reflect on what they learned in the process. The structure encourages transparency without fear by helping students own their learning choices and demonstrate integrity in a world where AI is everywhere.

At its core, this form guides students through three key questions:

What did I use, and why?

How did I verify or adapt what the AI produced?

What did I learn about the process (including its limits or biases)?

These are not compliance questions; they’re reflection prompts. They help students articulate how AI supported their thinking and where their own judgment came in.

When we talk about ethical AI use, this is what it looks like in action: transparent, traceable, and teachable.

You can download the AI Use Disclosure Pro Forma here and use it in your courses. Encourage students to complete it alongside drafts, design logs, or reflections. It works equally well for essays, projects, and creative work.

Because rules tell students what to disclose.

Reflection teaches them why it matters.

If you use the form, as is or adapted, please let me know!